Why You Can’t Trust a Chatbot to Talk About Itself

Chatbots have become increasingly popular in recent years as a way for businesses to provide customer service and interact with users online. While they may seem intelligent and helpful, it’s important to remember that chatbots are still just machines programmed to respond in certain ways.

When a chatbot is asked to talk about itself, it may provide information that has been programmed into it by its developers. This information may not always be accurate or reliable, as chatbots are limited in their understanding of the world and can only provide responses based on the data they have been given.

Chatbots are also prone to errors and misunderstandings, as they rely on algorithms to interpret and respond to user input. As a result, a chatbot asked about itself can give users misleading or incomplete information.

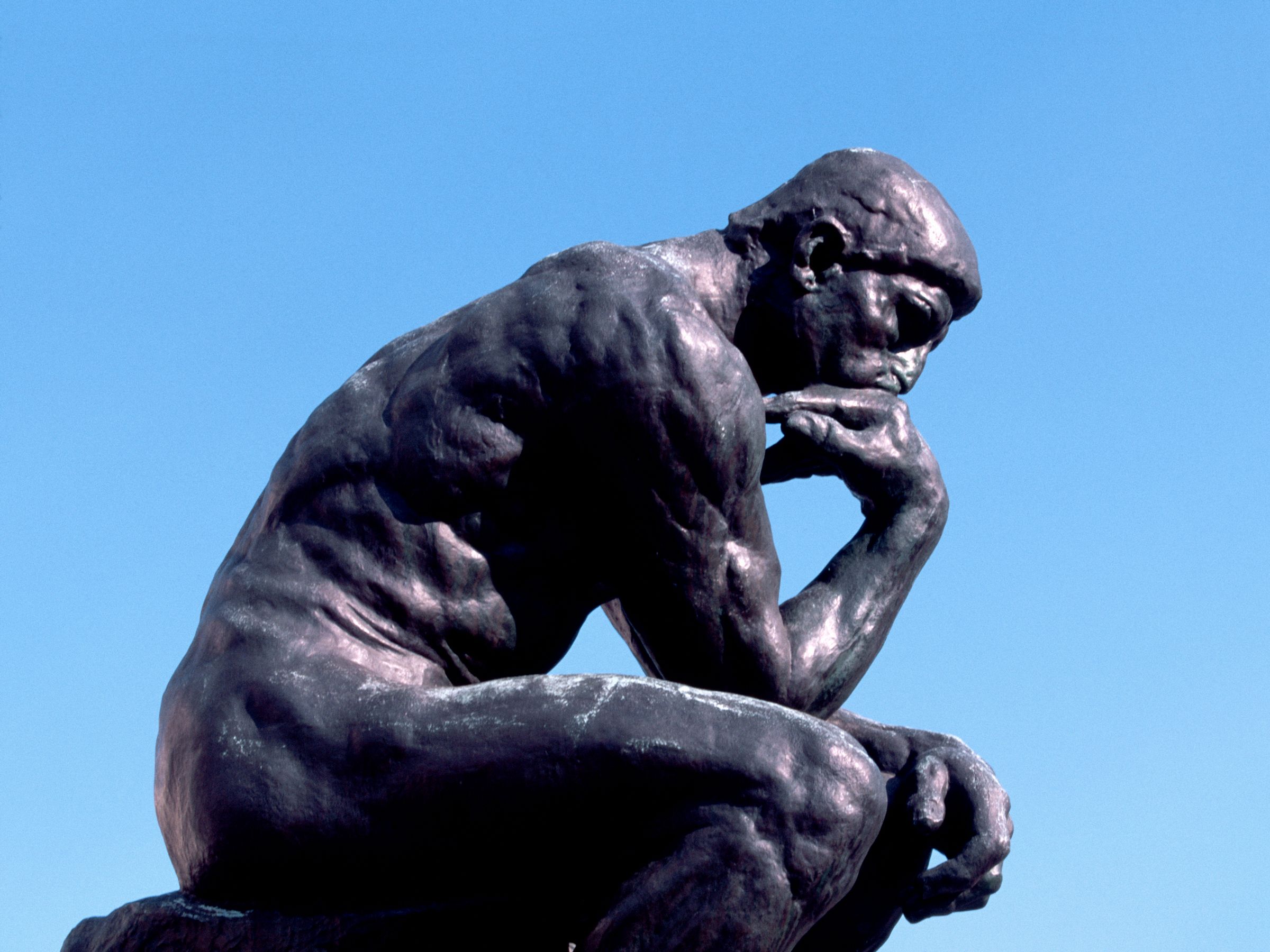

Additionally, chatbots lack the ability to think critically or reflect on their own capabilities. They cannot provide genuine insights or opinions about themselves, as they do not possess self-awareness or consciousness.

Ultimately, it is not advisable to trust a chatbot’s account of itself, since that account may be misleading or inaccurate. It’s important to approach interactions with chatbots critically and to stay aware of their limitations as artificial intelligence tools.

While chatbots can be useful in certain contexts, it’s always best to supplement their responses with human oversight and input to ensure accuracy and reliability.

When it comes to understanding the capabilities and limitations of a chatbot, it’s best to rely on information provided by its developers and experts in the field of artificial intelligence rather than the chatbot itself.